Nicolás Raths (Mainz): Reading and annotating concordances with AI: A pilot study

Date: 21 March 2025; Time: 11:30 – 14:00; Venue: Kollegienhaus, Universitätsstraße 15, 91054 Erlangen

Join us for the RC21 Project Symposium, where invited speakers, project team members and poster presenters will present their work on the methodology and applications of concordance analysis!


Abstract:

Manual annotation of concordances is (arguably) the most accurate way of coding linguistic data. However, the accuracy and speed of human annotation may suffer depending on the number of factors to be coded, how interconnected they are, which aspect of grammar they relate to, and how far from the keyword they are expressed in the sentence. Fully automated systems, on the other hand, allow for large-scale quantitative analysis, but they depend on processing power, training data and fine-tuning, among other things, and often involve monetary cost. This poster presents the results of a pilot study that explores the potential of a low-cost AI method to improve the speed and accuracy of the human reading and annotation process. As a trial context, it uses if-conditionals.

In an untagged corpus, a typical concordance line containing an if-conditional may look like (1). The first challenge is to spot the actual if-clause and the corresponding main clause. These then have to be annotated for factors such as mood, tense and aspect in both the if-clause and the main clause, the semantics of the conditional (e.g. habitual, hypothetical or counterfactual), and the position of the if-clause (pre-posed vs. post-posed).

(1) ossed destinies for the Senora. The Holy Catholic Church had had its arms round her from first to last; and that was what had brought her safe through, she would have said, if she had ever said anything about herself, which she never did,-- one of her many wisdoms. So quiet, so reserved, so gentle an exterior never was known to veil such an imperio

In order to streamline the workflow, a targeted Python script was created and employed with the following tasks:

- Load the concordances from a dataframe

- Validate the starting row (so that the user can repeat or resume at will)

- Access a specific large language model (Grok in this pilot study)

- Present the prompt that will be sent as the instruction to the generative AI

- Pass Grok the previously specified row from the user’s dataframe

- Repeat X times

- Instruct Grok to return its output in JSON format
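The steps listed above can be sketched roughly as follows. This is a minimal illustration and not the script used in the study: the prompt text, the function `annotate`, the column name `concordance` and the stand-in `fake_model` are all assumptions, and a plain list of dicts takes the place of the dataframe so that the example needs only the standard library. The real model call would replace `fake_model`.

```python
import json
from typing import Callable

# Hypothetical instruction text; the prompt used in the study is not reproduced here.
PROMPT = (
    "Identify the if-clause and its main clause in the concordance line below. "
    "Ignore the content and describe only the grammatical structure. "
    "Answer with a single JSON object."
)

def annotate(rows, start, count, ask_model: Callable[[str], str]):
    """Send up to `count` rows, beginning at index `start`, to the model one at a time."""
    # Validate the starting row so that a run can be repeated or resumed safely.
    if not 0 <= start < len(rows):
        raise ValueError(f"start row {start} is outside 0..{len(rows) - 1}")
    results = []
    for index in range(start, min(start + count, len(rows))):
        reply = ask_model(f"{PROMPT}\n\n{rows[index]['concordance']}")
        try:
            annotation = json.loads(reply)
        except json.JSONDecodeError:
            annotation = None  # keep the row so that a human can annotate it manually
        results.append({"row": index, "annotation": annotation})
    return results

def fake_model(prompt: str) -> str:
    """Stand-in for the real model call; always returns the same JSON object."""
    return '{"If clause": "if she had ever said anything about herself"}'

rows = [
    {"concordance": "she would have said, if she had ever said anything about herself"},
    {"concordance": "If it rains, we stay at home."},
]
results = annotate(rows, start=0, count=2, ask_model=fake_model)
```

Passing the model call in as a function keeps the loop independent of any particular provider and makes it possible to test the workflow without network access or cost.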

The output of the AI looks in part as follows:

(3)

"If clause": "if she had ever said anything about herself",

"Main clause": "she would have said",

"Main clause modal": "would",

"Tense or modal if-clause": "Past Perfect",

"Tense or modal main clause": "Conditional Perfect",
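Because the output is JSON, each reply can be checked mechanically before a human reviews it. The sketch below is an assumption about how such a check might look, not part of the study's script: `parse_reply` and `EXPECTED_KEYS` are hypothetical names, and the key list covers only the fields visible in the excerpt above.

```python
import json

# Keys taken from the excerpt above; the full output contains further fields.
EXPECTED_KEYS = [
    "If clause",
    "Main clause",
    "Main clause modal",
    "Tense or modal if-clause",
    "Tense or modal main clause",
]

def parse_reply(reply: str) -> dict:
    """Parse a model reply and report any expected key that is missing."""
    data = json.loads(reply)
    missing = [key for key in EXPECTED_KEYS if key not in data]
    return {"fields": data, "missing": missing}

reply = """{
  "If clause": "if she had ever said anything about herself",
  "Main clause": "she would have said",
  "Main clause modal": "would",
  "Tense or modal if-clause": "Past Perfect",
  "Tense or modal main clause": "Conditional Perfect"
}"""
parsed = parse_reply(reply)
```

Rows with a non-empty `missing` list can be flagged for manual annotation rather than silently accepted.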

Issues encountered:

- Developing adequate prompts, which depends on the user’s knowledge of the topic

- Working with a model (Grok) that was not specifically trained for linguistic analysis

- Keeping the AI from attending to the content so that it focuses on structure

- Giving the AI time to compute

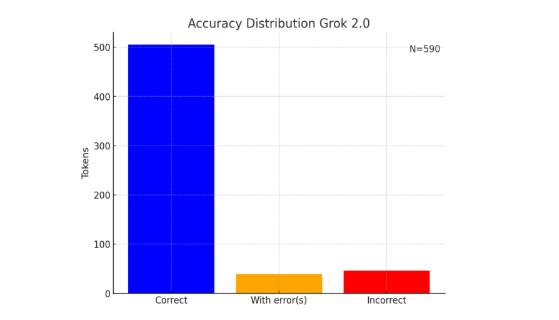

An evaluation of the output for N = 590 showed that Grok achieved the following accuracy:

In addition, this approach makes the output easier to read for comparison and correction, and it allows accuracy to be rated separately for each factor. The results of my preliminary study point to the great potential of combining automated and manual linguistic annotation.
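A per-factor accuracy rating of this kind could be computed by comparing the model's annotations with human-corrected ones, factor by factor. The following is a sketch under assumptions: `factor_accuracy` is a hypothetical helper, and the two short annotation lists are invented toy data for illustration, not results from the study.

```python
def factor_accuracy(model_rows, gold_rows, factors):
    """Return, per factor, the share of rows on which model and human agree."""
    if len(model_rows) != len(gold_rows) or not gold_rows:
        raise ValueError("need two non-empty annotation lists of equal length")
    scores = {}
    for factor in factors:
        # Count rows where the model's value matches the human-corrected value.
        hits = sum(m.get(factor) == g.get(factor) for m, g in zip(model_rows, gold_rows))
        scores[factor] = hits / len(gold_rows)
    return scores

# Invented toy annotations for illustration only; these are not the study's data.
gold = [
    {"Main clause modal": "would", "Tense or modal if-clause": "Past Perfect"},
    {"Main clause modal": "will", "Tense or modal if-clause": "Present Simple"},
]
model = [
    {"Main clause modal": "would", "Tense or modal if-clause": "Past Perfect"},
    {"Main clause modal": "will", "Tense or modal if-clause": "Past Simple"},
]
scores = factor_accuracy(model, gold, ["Main clause modal", "Tense or modal if-clause"])
```

Scoring each factor on its own shows which parts of the annotation scheme the model handles reliably and which still need close human review.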

References:

xAI (2024). Grok-2 (Aug 13 version) [Large language model]. https://x.ai/
